Read Advisory Board's take: The potential benefits, and downsides, of chatbots for providers.

When a person reaches out to Sara to cope with stress and anxiety, she might inquire about how they're spending their free time. From there, she might suggest some relaxing activities, such as reading a book or taking a bath.

Sara might remind you of a supportive peer or even a professional therapist, but she's neither: She's a chatbot, developed by San Francisco-based startup X2AI, to offer mental health support for $5 a month, Daniella Hernandez reports for the Wall Street Journal.

Researchers increasingly are examining whether artificial intelligence (AI) and other virtual tools, such as chatbots, can help deliver services and complement interactions with therapists or other providers.

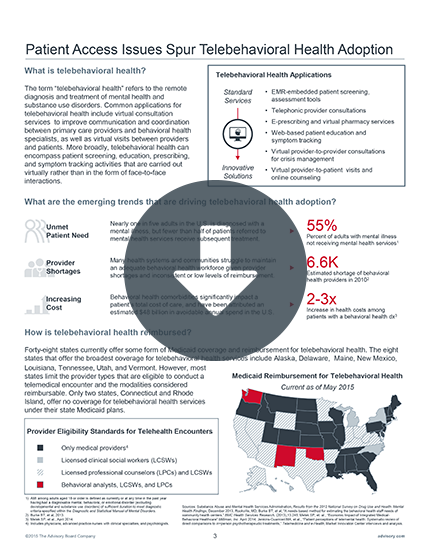

Data from the National Institute of Mental Health show that fewer than half of the 20% of U.S. adults who have a mental health condition receive treatment. Researchers hope that this new wave of digital tools can help close that gap and increase patients' access to mental health services.

Inside a chatbot consultation

According to the Journal, some of these products are already available on the market.

For example, Sara, X2AI's chatbot, offers 24/7 support to users for $5 a month. To engage with Sara, users must send her an initial text. Hernandez shares a recent exchange she had with the chatbot after she texted Sara about feeling tired at work:

"How much time do you give yourself to de-stress before getting ready for bed?" Sara asks in reply.

"Half an hour on a good night," Hernandez texts back.

"What do you do to calm down or relax?" Sara asks.

"I watch crappy tv or read," Hernandez replies.

"If you need more ideas, try reading a relaxing novel, meditating, or taking a soothing bath," Sara suggests. "Sound good?"

According to Hernandez, Sara's interactions "are encouraging and empathetic—if occasionally robotic."

Another app, Hernandez writes, uses a chubby penguin named Wysa to teach adolescents how to adapt to stress using cognitive behavioral therapy, meditations, and mindfulness techniques that can be helpful in a self-help context.

Wysa co-founder Ramakant Vempati described Wysa as a wellness app and said it is not intended to serve as a medical intervention. According to Vempati, most of the app's 400,000 users are younger than 35. There have been more than 20 million interactions with the chatbot, Vempati said.

Meanwhile, Gale Lucas, a human-computer interaction researcher at the University of Southern California, and her team at USC's Institute for Creative Technologies are developing a voice-based chatbot named Ellie, designed to help veterans with post-traumatic stress disorder (PTSD). Ellie is a computer-generated virtual woman sitting at a desk who appears on screen to ask veterans about their military service and any traumatic experiences they may have had. For instance, Ellie will ask veterans whether they experienced sexual assault or faced other trauma.

Ellie is intended to help clinicians determine which patients could benefit from speaking with a human. "This is not a doc in a box. This is not intended to replace therapy," Lucas said.

How users might benefit

Lucas said some of the benefits of chatbots are clear. Some people, she said, might be more willing to open up to a chatbot than a therapist about anxiety, depression, or PTSD, because they are less likely to feel judged or stigmatized.

Beth Jaworski, a mobile apps specialist at the National Center for PTSD in Menlo Park, California, cautioned that there is not yet research to show whether the tools can help people who have serious conditions such as major depression.

In some cases, the technology has shown some clear limitations, such as difficulty understanding context or interpreting emotions. For example, Sister Hope—a no-cost faith-based chatbot developed by the San Francisco-based startup X2AI and available on Facebook—could not understand a user when she complained about the San Francisco weather.

When interacting with Sister Hope, Hernandez saw the app wasn't following the conversation.

"I hate the weather here in San Francisco," Hernandez wrote. "It's so cold."

To that, Sister Hope replied, "I see, tell me more about what happened."

Hernandez elaborated by typing, "I did not bring a jacket. It's the middle of summer ... but you need a parka to stay warm here."

But Sister Hope wasn't following. "It sounds like you're feeling pleased, do you want to talk about this?" the bot replied.

Angie Joerin, X2AI's director of psychology, said compassion and empathy are another challenge. Joerin said developers at X2AI train chatbots to be compassionate and empathetic toward users experiencing anxiety, loneliness, suicidal thoughts, or other issues by using transcripts from mock counseling sessions.

Privacy is also a concern among clinicians, who worry that companies might collect sensitive mental health information, the Journal reports. According to the Journal, Wysa said it does not collect identifiable data from users, and X2AI said it collects minimal data from users.

According to the Journal, researchers are testing ways to engage people more effectively using chatbots, and they are making progress as AI improves and they address the technical shortcomings of the technology—such as language comprehension (Hernandez, Wall Street Journal, 8/9).

Advisory Board's take

Peter Kilbridge, Senior Research Director and Andrew Rebhan, Consultant, Health Care IT Advisor

Mental health care is undergoing a technological sea change. Providers are building telepsychiatry programs to improve patient access to specialists, using virtual reality for exposure therapy, and even creating AI-enabled robotic pets to combat social isolation.

Amid this wave of emerging technologies, chatbots have attracted particular interest, with proponents suggesting they can provide patients with self-help resources (e.g., medication reminders, tracking stress patterns), assessments (e.g., improving memory), and illness management assistance (e.g., provider-directed therapy, social support networks).

Chatbot technologies have a number of appealing features, including:

- they can act as a supplement to traditional in-person therapy, alleviating workloads for specialists;

- they provide greater access to care at a lower cost;

- they offer convenience, given their 24/7 availability;

- they can be scaled quickly; and

- they offer a venue for people to share potentially sensitive information anonymously without fear of judgment.

That said, the technology is still in its early stages, and major obstacles remain to adoption (including technical functionality, clinical efficacy and user acceptance). So what should providers expect as chatbots continue to evolve and gain traction? Here are three key takeaways:

- Expect bumps along the way, especially as chatbots learn to display empathy and use natural language. In the future, artificial emotional intelligence may enable AI to uncover signs of depression, anxiety, or other emotive states. Vendors such as Beyond Verbal and Affectiva, for example, are using AI to analyze humans' verbal and non-verbal cues, including facial expressions and gestures. We're also seeing growing sophistication of natural language processing in virtual assistants. Still, all of these technologies are in their early phases, so providers shouldn't expect them to be ready for adoption anytime soon.

- AI will continue to supplement, rather than replace, human expertise. Chatbots won't replace clinicians anytime soon. Providers should rely on mobile apps, virtual assistants, and other AI tools to augment the work of a human therapist (e.g., reinforcing therapeutic lessons in between visits) and help close gaps in care.

- Always implement strict quality controls. The AI market is at its peak hype phase, with thousands of mental health apps flooding the market. It will take time to ensure that proposed solutions have undergone rigorous clinical evaluation. The ideal tools in this arena will employ validated evidence-based cognitive techniques, offer intuitive design that sustains user engagement, and ensure data privacy and security. That's an ideal that many of today's chatbots fail to meet.

To learn more about emerging technologies in digital health, view our cheat sheets on artificial intelligence, the Internet of Things, digital health systems and more.
